Dynamic Multi-Team Racing: Competitive Driving on 1/10th-Scale Vehicles via Learning in Simulation

Abstract

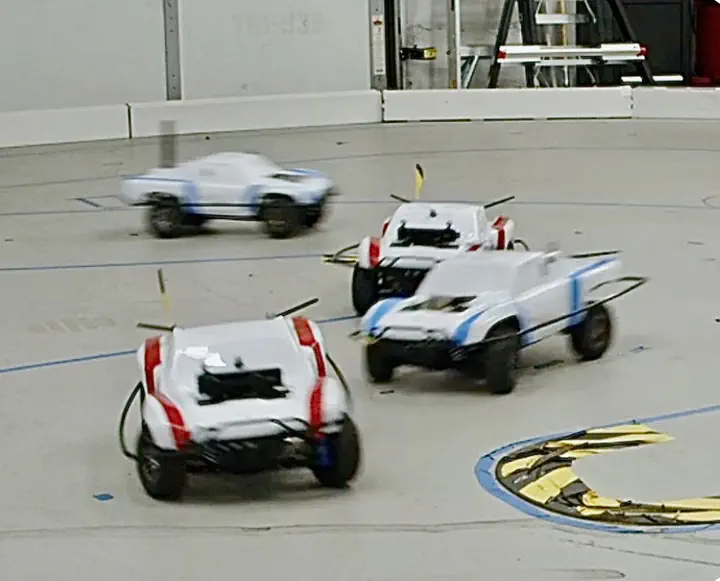

Autonomous racing is a challenging task that requires handling a vehicle at the dynamic limits of friction. While single-agent scenarios such as time trials can be handled competitively by classical model-based or model-free feedback control, multi-agent wheel-to-wheel racing poses additional challenges, including planning under uncertainty about opponents' intentions and negotiating interactions subject to dynamic constraints. We propose to address these challenges with a learning-based approach that combines model-based techniques, massively parallel simulation, and self-play reinforcement learning to enable zero-shot sim-to-real transfer of highly dynamic policies. We demonstrate the efficacy of our approach by deploying the resulting policies in wheel-to-wheel multi-agent races on 1/10th-scale hardware. A supplementary video shows several of these hardware races.